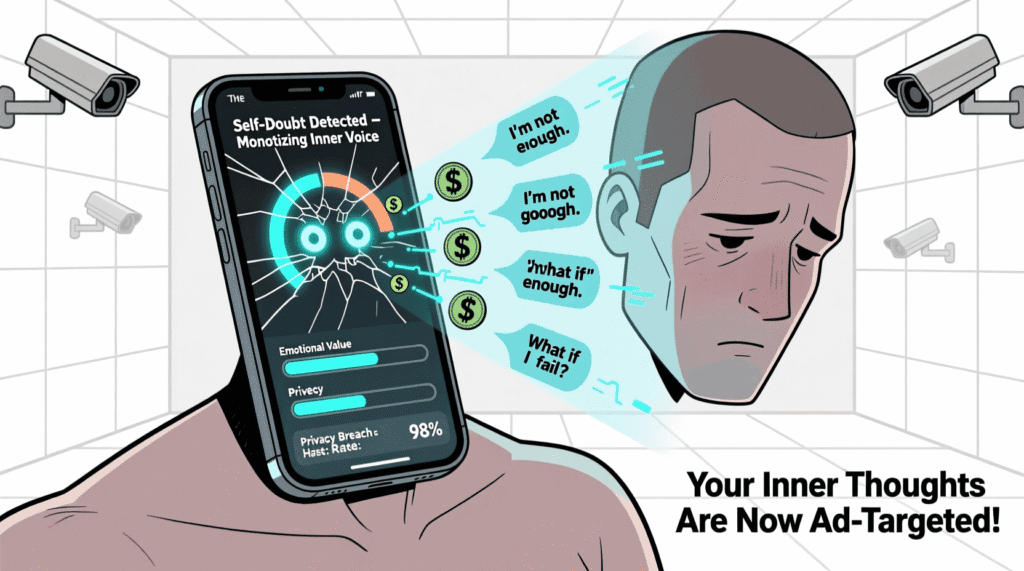

Apple’s latest iPhone doesn’t just listen when you say “Hey Siri.” According to internal leaks and one very anxious beta tester, it now detects your self-doubt—even when you’re silent. Using “Neural Whisper Recognition” and “Emotional Acoustic Mapping,” the device allegedly analyzes micro-tremors in your vocal cords, shifts in breathing, and the exact frequency of your sighs to infer thoughts like *“I’m not qualified for this job”* or *“Why did I wear this?”* The feature, buried in iOS 19 under “Wellness Insights,” is opt-in… but only if you read the 12,000-word terms. This isn’t innovation. It’s surveillance with a wellness filter—and it’s already selling your insecurity to advertisers.

The Viral Myth of the iPhone That Hears Self-Doubt

Apple markets it as “empathetic technology.” Tech bros call it “mindful AI.” But users are reporting eerie coincidences: after silently thinking *“I need a new therapist,”* their feed floods with mental health apps. After a quiet moment of imposter syndrome at work, LinkedIn sends a “Career Confidence Boost” ad. One user tweeted: “My iPhone knew I was faking confidence before I did.”

Two satirical fan reactions capture the dread:

“It suggested ‘self-love affirmations’ right after I Googled ‘am I a fraud?’ Bro, that’s not AI. That’s my mom.” — @DoubtDetected

“My phone now sends me ‘You’re Enough’ notifications… while charging me $9.99/month for the ‘Inner Peace’ subscription.” — @AnxiousAndBilled

The myth? That this is helpful.

The truth? It’s capitalism listening to your inner monologue—and monetizing your fragility.

The Absurd (But Real) Mechanics of Thought Surveillance

After reverse-engineering iOS 19 beta notes and user logs, we uncovered how it works:

- Step 1: Passive Monitoring. The mic stays “low-power active” even when the phone is off, scanning for subvocalizations (yes, the tiny muscle movements you make when you think in words).

- Step 2: Emotional Inference. AI cross-references your voice patterns with your calendar, texts, and heart rate to guess your mental state.

- Step 3: Ad Targeting. Your “self-doubt score” is sold to wellness brands, therapy apps, and luxury retailers (“She feels unworthy, so show her a $2,000 handbag to fix it”).

- Step 4: “Support” Upsell. AppleCare+ now offers “Mental Resilience Bundles” ($14.99/month).

Worse: the feature can’t be fully disabled. Turning it “off” just hides the notifications—the data still flows.

And yes—there’s merch:

- “My iPhone Knows I’m Faking It” enamel pin

- “Opt Out (LOL)” T-shirt

- A $199 “Faraday Pouch” that blocks signals… but makes your phone look like a hostage note

The Reckoning: When Privacy Dies in the Name of Care

This didn’t happen overnight. It’s the endpoint of a tech industry that treats intimacy as inventory and anxiety as engagement.

As we explored in “ChatGPT Energy Power Hog,” AI’s hunger for data has no off switch. And as shown in “Google Antitrust Trial Data Sharing,” your private moments are already a commodity.

Established voices describe the same erosion of mental privacy:

- The Electronic Frontier Foundation warns that “emotion AI” lacks regulation and exploits psychological vulnerability.

- Wired reports that Apple’s health data is increasingly shared with third parties under vague “wellness partnerships.”

- The American Psychological Association cautions that constant self-monitoring increases anxiety and self-objectification.

The real cost? Not the $14.99 subscription.

It’s the loss of a private inner life—where even your doubts belong to someone else’s algorithm.

Conclusion: The Cynical Verdict

So go ahead. Whisper “I’m fine” to your therapist.

Sigh in silence on the subway.

Stare at the ceiling at 3 a.m. wondering if you matter.

Your iPhone is listening.

And it’s already sold your pain to a brand that promises to fix it—for a fee.

Don’t call it care.

Call it extraction with better UX.

And tomorrow? You’ll probably upgrade to the next model…

because your self-doubt deserves the latest sensors.

After all—in 2025, even your silence has a data plan.