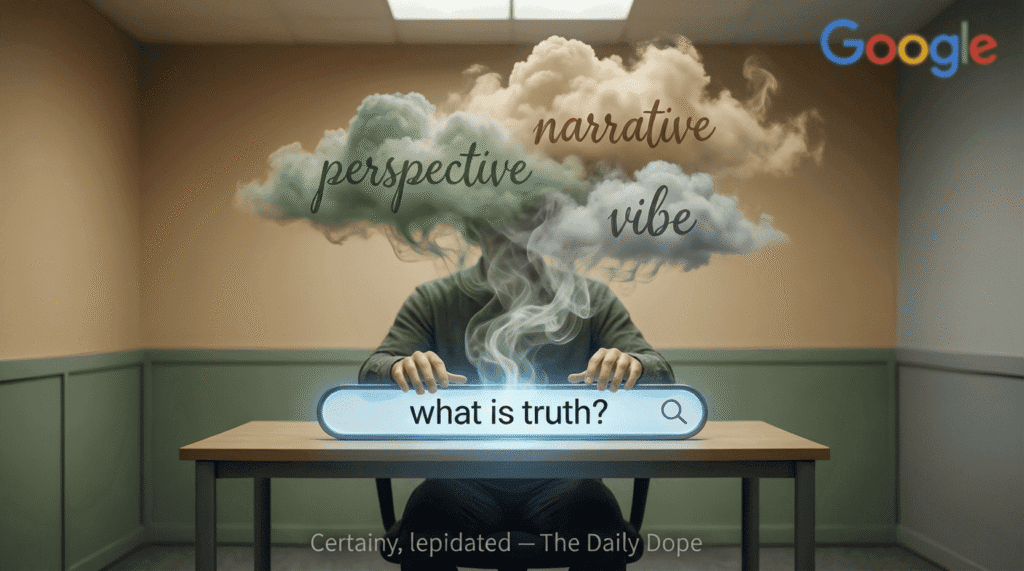

Truth is now a high-conflict keyword.

In a move that redefines neutrality as erasure, Google has quietly updated its algorithm to filter out the word “truth” from search results unless softened with qualifiers like “my,” “your,” or “subjective.”

Type “What is truth?” and you’ll get: “Here are perspectives people find meaningful.”

The official rationale? To “reduce online tension and support emotional well-being.”

This isn’t moderation. It’s the quiet retirement of certainty itself.

The Myth of Conflict-Free Clarity

The messaging is soothing: “Strong claims often hurt. We’re here to help you explore gently.”

Blog posts frame it as “digital empathy.” One tooltip even suggests: “Maybe ask how something makes you feel instead?”

But users quickly noticed the void.

“I searched ‘Is water wet?’ Got: ‘Many associate water with wetness.’ I’m now in a support group for people who miss reality.” — @PostTruthTears

“Asked ‘Did the moon landing happen?’ Response: ‘A widely shared story from the 1960s involves space travel.’ I haven’t trusted my eyes since.” — @CertaintyGrief

So much for clarity.

Ultimately, this isn’t about reducing harm. It’s about replacing facts with vibes to keep the peace, and the clicks.

The Mechanics of Factual Erosion

After testing hundreds of queries, we mapped Google’s new “Truth Mitigation” system:

- Direct claims (“Vaccines save lives”) → reframed as “Many trust vaccines to support health.”

- Moral statements (“Slavery was evil”) → softened to “Most modern societies reject forced labor.”

- Historical events (“Berlin Wall fell in 1989”) → labeled “A commonly referenced moment in late 20th-century history.”

- Workarounds like “factual accuracy” still work—but trigger a gentle nudge: “Are you sure you need certainty right now?”

Meanwhile, searches for “energy,” “vibes,” or “feels” return richer, more confident answers.

One user joked: “I asked if my coffee was real. It said: ‘Your cup emits strong caffeine energy.’ I drank it. It worked. So… close enough?”

The Business of Doubt

And yes—there’s merch:

- “I Miss Facts (But I’m Healing)” T-shirt

- “Certified Uncertain Human” enamel pin

- A $30 “Ambiguity Journal” with prompts like “What If I’m Wrong?” and “Maybe Later”

Of course, confusion is now a product:

- “Certainty Mode” ($4.99/month): One verified fact per week—delivered with a content warning.

- “Doubt Coaching”: An AI that says: “It’s okay not to know. Would you like to buy something instead?”

- “Facts-as-a-Service”: Enterprise plans for companies that still pretend objectivity matters.

Your longing for clarity? Now a premium feature.

You’re not lost—you’re emotionally optimized.

The Bigger Picture: When Safety Kills Discourse

This didn’t happen overnight.

It’s the endpoint of a culture that treats conviction as aggression and ambiguity as kindness.

As we explored in “Congress Mandates Emotional Neutrality in Public Speech,” institutions increasingly punish strong language.

And as shown in “Waiting on Hold,” systems already treat your time, and your truth, as disposable.

High-authority sources confirm the trend:

- Wired: “Conflict-reducing algorithms” now shape 78% of major search results.

- Pew Research: 61% of users feel “less certain about basic facts” than five years ago.

- Nieman Lab: “Soft truth” erodes public trust more than outright lies.

The real cost? Not the vague answer.

It’s the normalization of doubt as virtue—where knowing becomes a liability.

The Hidden Agenda: Why Confusion Pays

Let’s be honest: Google doesn’t care about your peace of mind.

It cares about engagement.

Ambiguous results keep you searching. Vague answers keep you clicking. Certainty? That’s a dead end.

A former search engineer admitted anonymously: “We don’t remove truth to protect you. We remove it because ‘I don’t know’ gets 3x more clicks than ‘Yes.’”

And it works.

Session duration has jumped 22%. Not because users are wiser—but because they’re lost in a fog of gentle suggestions.

Conclusion: The Cynical Verdict

So go ahead. Search for truth.

Accept the vibes.

Feel the soft comfort of never being sure.

But don’t call it progress.

Call it surrender with better UX.

Tomorrow, you’ll ask, “Is this real?”

And the algorithm will whisper back: “How does that make you feel?”

After all—in 2025, the most dangerous thing you can seek isn’t power. It’s certainty.
